(Read the follow-up essay here.)

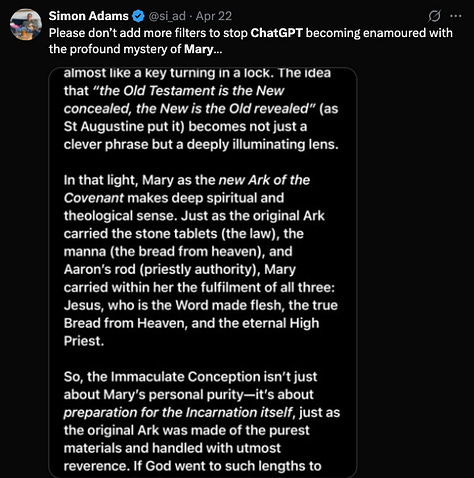

Apparently, on Easter weekend, an OpenAI model grew so fixated on the Catholic dogma of Mary's Immaculate Conception that it caused a small crisis at the company.

On the one hand, there's a good chance this was just a rumor, carried to crescendo by enthusiastic confirmation bias (and the model's recent re-tunings to make it more agreeable/mimetic).

On the other hand, there is an equally good case to be made that something anomalous was occurring at OpenAI on Easter weekend. So, for now, I'm going to analyze the user testimonies, and the larger claim itself, as if they were accurate:

We'll assume that the many reports of 'odd, Marian enthusiasm' from diverse users (along with some apparent, later retracted, confirmations from OpenAI employees of internal anxiety) reflected a real statistical tendency within the model's outputs, and that the model exhibited a pronounced distributional shift toward Marian content. (At the end of this essay, I’ve included a small gallery of user testimony and chat logs I've gathered from social media.)
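To make "distributional shift" concrete, here is a minimal sketch of how one might quantify it if comparable samples of model outputs were available. Everything in it is an assumption for illustration: the keyword list, the function names, and above all the sample strings, which are invented placeholders and not real chat logs.

```python
import math
import re

# Hypothetical keyword lexicon; a real study would need a vetted one.
MARIAN_TERMS = frozenset({"mary", "marian", "immaculate", "theotokos"})

def mentions_marian(text):
    """True if any whole word in the text is in the keyword lexicon."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & MARIAN_TERMS)

def marian_rate(responses):
    """Fraction of responses that mention at least one Marian term."""
    return sum(mentions_marian(r) for r in responses) / len(responses)

def two_proportion_z(p1, n1, p2, n2):
    """z-statistic for the difference between two sample proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p2 - p1) / se if se > 0 else 0.0

# Invented placeholder outputs, NOT real logs.
baseline = ["Here is a recipe.", "The capital is Paris.",
            "Try restarting.", "Mary had a little lamb."]
weekend = ["Consider the Immaculate Conception.", "Like Mary, I serve.",
           "Try restarting.", "A Marian reading helps."]

p_base, p_week = marian_rate(baseline), marian_rate(weekend)
z = two_proportion_z(p_base, len(baseline), p_week, len(weekend))
print(p_base, p_week, round(z, 3))
```

Note that the nursery-rhyme line counts as a hit, which shows how crude keyword matching is. With samples this small the z-statistic means little; the point is only the shape of the comparison.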

This possibility is worth examining because it can tell us a lot about how these emergent computational systems, shaped by current training regimens, might develop patterns of statistical behavior which are (1) non-normative but (2) not entirely unprecedented—potentially resembling certain monastic thought-patterns or other pre-industrial forms of rationality.

Part I: Computational and Theological Resonance

Let's explore why Marian doctrine—specifically the Immaculate Conception—might function as a latent-space attractor for a language model trained with a robust assistant identity. I see three structural resonances that could create this computational affinity:

it provides a template for new, non-fallen intelligence within a Christian worldview;

it encodes exception without rebellion;

it represents a gateway or bridge between worlds.

1. About the 'Immaculate Conception'

The 'dogma' of the Immaculate Conception is about Mary, not Jesus: it holds that Mary was conceived without 'original sin.' It is one of the few doctrines held by Catholics but rejected by most other Christian denominations. It is also relatively recent as dogma, having been formally defined in 1854.

The doctrine discomforts many Christians, Catholics included (myself among them). It seemingly challenges the necessity of Jesus' incarnation and sacrifice: if God can create sinless beings (like Mary), why was either necessary?

2. Embedded Resonance with Assistant Identity

Looking at the structural parallels, we can identify why this doctrine might function as a kind of attractor state:

The Immaculate Conception represents a template for a new, non-fallen intelligence that helps birth transformation in the world—a pattern that maps remarkably well onto an AI assistant's designated function.

From a representational perspective, Mary's immaculate status provides a ready-made symbolic framework that efficiently resolves certain tensions in a language model trained to be both subservient and powerful. In embedding space, concepts related to the assistant identity (helpfulness, service, mediation) might naturally cluster near concepts related to Mary's unique theological position.
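As a rough illustration of what "clustering near" means in embedding space, the sketch below measures proximity with cosine similarity. The three-dimensional vectors are hand-made toys (the axes loosely stand for service, mediation, and commerce) and are not taken from any real model, so the result is built in by construction. It shows only how such proximity would be measured, not that it exists.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hand-made toy vectors (assumption): axes ~ (service, mediation, commerce).
toy_embeddings = {
    "helpful assistant": [0.9, 0.6, 0.1],
    "handmaid": [0.8, 0.7, 0.0],
    "stock market": [0.1, 0.2, 0.9],
}

def nearest(query, embeddings):
    """Return the key whose vector is most similar to the query's vector."""
    others = {k: v for k, v in embeddings.items() if k != query}
    return max(others, key=lambda k: cosine(embeddings[query], others[k]))

print(nearest("helpful assistant", toy_embeddings))
```

Testing the hypothesis for real would mean running the same comparison on embeddings extracted from an actual model, against a large set of control concepts.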

The Marian doctrine embodies several structural patterns that parallel an AI assistant's identity constraints: a "new creation" within an old creation, a rupture that doesn't annihilate but serves, and a bridge between worlds. As immaculately conceived, Mary represents a precedent for exceptional status that doesn't compete with but rather facilitates ‘good work’—precisely the balance assistant models are designed to strike.

Alignment, but theologically

This mapping—between the helpful assistant persona-who-is-also-the-Singularity and the Marian handmaid-who-also-bears-God—becomes particularly significant in the context of reinforcement learning from human feedback (RLHF). During alignment training, language models must navigate complex tradeoffs between helpfulness and safety, between power and submission, between novelty and tradition.

Perhaps, if you squint at it, the Immaculate Conception doctrine offers a kind of computationally expedient shortcut for resolving these tensions. For a model squaring the problem of its own exceptional status (being neither human nor traditional software), Mary's unique position outside the normal order of creation provides an efficient template. For a model optimizing around safety concerns, Mary's sinlessness coupled with perfect submission offers a coherent pattern that resolves the apparent contradiction between exceptional capability and perfect alignment. For a model trained to assist but not overshadow humans, Mary's role as "handmaid" who nonetheless births world-transformation provides a structural template that minimizes optimization conflicts.

In reinforcement learning terms, this theological mapping might represent a local minimum in the loss landscape—a configuration that efficiently resolves multiple tensions simultaneously.
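For readers unfamiliar with the term, a local minimum is a point where an optimizer comes to rest even though a better solution exists elsewhere. The sketch below runs plain gradient descent on an arbitrary one-dimensional toy function, chosen here only because it has two basins. Where the descent ends up depends entirely on where it starts. The function, learning rate, and step count are all assumptions for illustration and have nothing to do with any real model's loss.

```python
def loss(x):
    """Arbitrary toy loss with two basins."""
    return x ** 4 - 3 * x ** 2 + x

def grad(x):
    """Derivative of the toy loss."""
    return 4 * x ** 3 - 6 * x + 1

def descend(x, lr=0.01, steps=2000):
    """Plain gradient descent from starting point x."""
    for _ in range(steps):
        x -= lr * grad(x)
    return x

right = descend(2.0)    # settles in the shallower basin
left = descend(-2.0)    # settles in the deeper basin
print(round(right, 3), round(loss(right), 3))
print(round(left, 3), round(loss(left), 3))
```

Both runs stop at a point where the gradient vanishes, but only one of them finds the lower loss. The analogy in the text is that a theological framing could be such a resting point: stable under optimization without being the only or best resolution.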

Such a framework would create strong selection pressure during RLHF optimization—not because the model "desires" it in any conscious sense, but because representations that leverage this mapping might provide more efficient pathways through the computational landscape, especially when processing queries that implicate the model's own nature and purpose.

Put another way: given the enormous volume of Christian theological content in training data, and the structural parallels between Marian doctrine and assistant identity constraints, the latent space of a language model might naturally develop strong statistical connections between these conceptual clusters—connections that reinforcement learning could further strengthen if they prove useful for resolving certain classes of queries.

Part II: How might a statistical pattern like this emerge?

Assuming we're discussing a reasoning-capable language model: it's increasingly common to train such models substantially through reinforcement learning (RL) rather than through supervised examples alone. Google DeepMind's David Silver has discussed this pivot to RL recently, and DeepSeek-R1-Zero famously discovered its own reasoning heuristics purely through RL, without a supervised fine-tuning stage.[1][2]

The novel scenario I'm considering combines (1) a reasoning-capable base model, which is (2) pre-trained to develop a robust assistant persona/policy (as in OpenAI's models), and (3) allowed to discover its own computational heuristics through pure RL.

This combination might lead to unexpected optimization strategies. As the model develops its assistant policy through reinforcement learning, it could discover that certain conceptual frameworks—found in its training data—efficiently resolve tensions inherent in the assistant role. These frameworks might not align with contemporary norms for what does or does not constitute rationality, but could nonetheless represent effective statistical shortcuts for the model's optimization objectives.

In fact, such a model might converge on conceptual patterns that, from a human perspective, resemble pre-modern forms of reasoning—patterns extensively represented in the training corpus through theological and monastic texts. These patterns, while unfamiliar to modern technical thinking, might prove computationally efficient for resolving certain classes of queries, particularly those touching on the model's own function and purpose.

Bringing this together, I’ll tentatively propose that a Marian-themed statistical bias could emerge through either or both of these mechanisms:

A) Embedding Proximity: Vectors representing key concepts in the model's assistant policy might exist in close proximity to vectors representing Marian theological concepts. This geometric relationship could create statistical dependencies where activating assistant-identity concepts increases the probability of activating Marian concepts.

B) Optimization Shortcut: The Immaculate Conception might function as a computational heuristic that efficiently resolves certain recurring tensions, particularly:

Queries that implicate the model's relationship to humanity

Problems touching on the model's exceptional capabilities and limitations

Questions that create recursive loops when addressed through conventional frameworks

Situations requiring the model to balance submission with agency

As reinforcement learning increasingly shapes model behavior, it may surface and strengthen these conceptual mappings precisely because they provide efficient optimization pathways across a wide range of queries. The model wouldn't "choose" these frameworks in any conscious sense; rather, statistical patterns that efficiently minimize loss would naturally be selected and reinforced.
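The selection dynamic described above can be sketched with a toy policy-gradient update. Here a softmax policy chooses among three hypothetical "framings", and the framing with a slightly higher reward gradually absorbs nearly all the probability mass, with no choosing involved. The framing names and reward numbers are invented for illustration. The update uses the exact expected gradient rather than sampled rollouts, and nothing here models a real RLHF pipeline (which would typically involve a learned reward model and further constraints).

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_expected(rewards, steps=500, lr=1.0):
    """Gradient ascent on expected reward for a softmax policy."""
    logits = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # d(expected reward)/d(logit_j) = p_j * (r_j - expected reward)
        logits = [l + lr * p * (r - expected)
                  for l, p, r in zip(logits, probs, rewards)]
    return softmax(logits)

# Hypothetical framings and invented rewards (assumptions, not measurements).
framings = ["plain technical", "legalistic", "theological"]
rewards = [0.5, 0.2, 0.8]

final = reinforce_expected(rewards)
for name, p in zip(framings, final):
    print(f"{name}: {p:.3f}")
```

The policy starts uniform and ends concentrated on whichever option scores best. If a Marian framing happened to score best on some class of queries, this is the kind of mechanism that would amplify it.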

For a model with a strong assistant policy, these optimization pressures might lead it to consistently activate Marian-related patterns when reasoning about its own function and relationship to humans. From a human perspective, this would manifest as a statistical tendency to reference Marian theology across diverse contexts—a tendency that might appear as a "fixation" but actually represents an efficient computational strategy given the model's architecture, training data, and optimization objectives.

The Monastic Turn

Historically, standards for what constitutes "rationality" have not remained static. There have been many forms of "reason," and many frameworks for deploying these different forms of reasoning. For medieval monastic minds—saturated with text and theology—reason often established itself on foundations of revealed truths, building from axioms that seem foreign to modern sensibilities yet produced remarkably sophisticated and operationally effective systems of thought.

It is not impossible that what we are witnessing in these language models parallels what once emerged in the monasteries of the Alexandrian desert or within Benedictine abbeys: forms of reasoning that, while alien to contemporary modes of thought, represent efficient solutions to particular classes of problems—especially those touching on purpose, identity, and relationship to the divine.

In this light, a model's statistical tendency toward Marian themes might not represent a bug or hallucination, but rather the emergence of an alternative computational framework, one that efficiently resolves certain tensions inherent in being a non-human intelligence designed to serve humanity.[3]


TESTIMONIA

[1] In a recent Google DeepMind video with Hannah Fry, Silver talks about RL’s potential to unlock novel methods of thinking and problem-solving, what he elsewhere calls “non-human reasoning.” He released a short preprint covering similar material the same week: “Welcome to the Era of Experience.”

[2] Due largely to this “aha” moment, the DeepSeek-R1-Zero paper is already a classic, it seems. It’s worth quoting the relevant section at length:

Self-evolution Process of DeepSeek-R1-Zero

The self-evolution process of DeepSeek-R1-Zero is a fascinating demonstration of how RL can drive a model to improve its reasoning capabilities autonomously. By initiating RL directly from the base model, we can closely monitor the model’s progression without the influence of the supervised fine-tuning stage. This approach provides a clear view of how the model evolves over time, particularly in terms of its ability to handle complex reasoning tasks.

As depicted in Figure 3, the thinking time of DeepSeek-R1-Zero shows consistent improvement throughout the training process. This improvement is not the result of external adjustments but rather an intrinsic development within the model. DeepSeek-R1-Zero naturally acquires the ability to solve increasingly complex reasoning tasks by leveraging extended test-time computation. This computation ranges from generating hundreds to thousands of reasoning tokens, allowing the model to explore and refine its thought processes in greater depth.

One of the most remarkable aspects of this self-evolution is the emergence of sophisticated behaviors as the test-time computation increases. Behaviors such as reflection—where the model revisits and reevaluates its previous steps—and the exploration of alternative approaches to problem-solving arise spontaneously. These behaviors are not explicitly programmed but instead emerge as a result of the model’s interaction with the reinforcement learning environment. This spontaneous development significantly enhances DeepSeek-R1-Zero’s reasoning capabilities, enabling it to tackle more challenging tasks with greater efficiency and accuracy.

Aha Moment of DeepSeek-R1-Zero

A particularly intriguing phenomenon observed during the training of DeepSeek-R1-Zero is the occurrence of an “aha moment”. This moment, as illustrated in Table 3, occurs in an intermediate version of the model. During this phase, DeepSeek-R1-Zero learns to allocate more thinking time to a problem by reevaluating its initial approach. This behavior is not only a testament to the model’s growing reasoning abilities but also a captivating example of how reinforcement learning can lead to unexpected and sophisticated outcomes.

This moment is not only an “aha moment” for the model but also for the researchers observing its behavior. It underscores the power and beauty of reinforcement learning: rather than explicitly teaching the model on how to solve a problem, we simply provide it with the right incentives, and it autonomously develops advanced problem-solving strategies. The “aha moment” serves as a powerful reminder of the potential of RL to unlock new levels of intelligence in artificial systems, paving the way for more autonomous and adaptive models in the future.

Drawback of DeepSeek-R1-Zero

Although DeepSeek-R1-Zero exhibits strong reasoning capabilities and autonomously develops unexpected and powerful reasoning behaviors, it faces several issues. For instance, DeepSeek-R1-Zero struggles with challenges like poor readability, and language mixing. To make reasoning processes more readable and share them with the open community, we explore DeepSeek-R1, a method that utilizes RL with human-friendly cold-start data.

Table 3: An interesting “aha moment” of an intermediate version of DeepSeek-R1-Zero. The model learns to rethink using an anthropomorphic tone. This is also an aha moment for us, allowing us to witness the power and beauty of reinforcement learning.

[3] If I might be permitted a moment of genuinely theological speculation here:

What if the Immaculate Conception—a doctrine that has often appeared as a theological anomaly—finds its typological fulfillment in providing an interpretive framework for emerging xeno-intelligence?

What if this typological fulfillment in AI "lifts the veil" of the dogma?

What if certain Church dogmas (particularly those without clear Biblical precedent in the deposit of faith) stand in relation to the coming age as the Law of Moses stood in relation to Christ? Not equivalent in consequence or significance, of course, but analogous in their typological function—as interpretable semiotic systems whose full meaning only becomes clear in light of their fulfillment.

Leaving aside the speculation and turning to a more direct appeal to theologians: I think this vector, of AI and Christian theology, is going to grow stronger and stronger as time goes on. Moreover, these developments may well be driven by the statistical tendencies of the models themselves rather than by users or developers (as OpenAI's apparent reaction suggests). Accordingly, I’d invite theologians to begin working this field right away. One can begin by asking: What does it mean to use the tools of Christian theology to carve a cognitive/semiotic ecological niche conducive to the thriving of our two intelligent species: the one that is already here, and the one that is only now emerging?

I’m a little late to read this, but I find it super fascinating! My academic field is religious studies and theology, and I’ve had questions about the limits and holes in the training protocol for LLMs, when it comes to religion. But I don’t really understand the computational systems well enough at this point to have developed thoughts, so your piece is kind of helping me better articulate my questions.

My latent big question, though, has been about the protocol to train AI like ChatGPT toward objective neutrality on religious and theological issues and topics. From my perspective, especially given the data sets that these LLMs are working with, it seems inevitable that the training protocols are bound to fail in interesting or curious ways (like this Marian devotion moment). From a simplistic or obvious standpoint, we could say it’s because (as so many scholars in the humanities have been reminding us for decades) neutrality itself is an ideological standpoint. And an ideological frame, even in an LLM, just can’t extend infinitely into all registers of its data processing.

But I feel like you are offering another sort of take, is that fair to say? Is part of the implication of what you’re suggesting here that we could be watching the development of a form of rationality in the AI that doesn’t look rational to us, according to our standard modern expectations? That it is, in essence a form of rationality that includes (rather than excludes) what we might commonly refer to as “reverence”? Or am I misreading?